The Illusion of Understanding

And why AI art feels hollow... Yes, I'm talking about AI again. Leave me alone.

Say, have you ever heard of the “Chinese room” thought experiment by John Searle?

I know, I know… This feels like one of those moments in the Zero Escape games where the characters suddenly start talking about some random deep topic. Now that I think about it, this might even be one of the topics those games cover. My memory is a bit fuzzy, but whatever, bear with me here.

Imagine waking up on the floor of a dimly lit room you don't recognize. The walls are bare, the air is still, and there are no windows, only a single locked door. In the center of the room are a chair, a table, a pen, a stack of papers, and a massive book.

Suddenly you hear a faint noise. Turns out someone has slipped a sheet of paper under the door. Confused, you grab it, and it's covered in characters you can't read. They look Chinese, but for all you know, they could be Japanese or Korean.

You then sit on the chair and open the book. Turns out it's an insanely detailed manual that tells you exactly which Chinese symbols to write down in response to the paper you just got. Carefully, you match the unfamiliar squiggles to the book's instructions and slide back an answer that, to a Mandarin speaker, would read as perfectly fluent.

More papers come. More and more and more… Each one contains a question, and all you're doing is writing responses to them. To the Mandarin speaker outside, it looks like the room speaks fluent Mandarin. Now the question is... do you actually speak Mandarin in this scenario?

The obvious answer is no. You're just following instructions, and have no grasp of the actual meaning behind the symbols. And this raises another question: What does it mean to truly understand something?

I'm sure most people would agree that simply following a set of instructions isn't the same thing as truly understanding something. And generative AI models (think of ChatGPT), kinda like the person in the room, are just following instructions.

I think this is part of the reason why people are so skeptical of stuff generated by these AI models. With a human, you can normally trust that they understand what they're saying. With an AI, however, that kind of trust feels almost impossible on some weird, intuitive level.

But what exactly is missing here? What do humans have that generative AI models don't?

The Intentionality

While researching the Chinese Room argument, I ended up going down a rabbit hole and came across a philosophical concept called intentionality. And no, it's not the same thing as intention. It's more about the capacity to represent, or be about, something.

For example, when I say “Sonic Forces is a bad game”, my sentence represents my own evaluation of the game Sonic Forces. The sentence has intentionality because it actually stands for something.

Now, let's say I found some random dude who knows jack shit about video games and told him to repeat the same sentence. Does what he says stand for anything? After all, this guy has never even heard of Sonic Forces. What understanding could he possibly have of it?

Let's dial it up a notch. Let's say we made him say it in German instead: “Sonic Forces ist ein gutes Spiel”. This guy doesn't speak German, though. He has no idea what he just said, which removes any remaining doubt: there is no intentionality behind his words.

In fact, I lied to you. That German sentence actually meant “Sonic Forces is a good game”! Gotcha!

Now, let's say we asked an LLM (large language model) its opinion on Sonic Forces. Regardless of what it might say, it's not going to be based on any internal belief about the game in question, it's just going to be a mechanical reaction.

This is because LLMs operate through statistical associations between words and phrases. They are trained on massive datasets made up of things like books, articles, research papers, Reddit threads, websites, and so on. Through this training, they learn to detect patterns and relationships between words, based on how often and in what contexts they appear together. Internally, words (or rather, chunks of words called tokens) are represented as lists of numbers that encode those relationships. This is an oversimplification of the whole thing, but you get the idea.

This means that, yes, LLMs do not “think” in the human sense. Their responses aren't grounded in beliefs or understanding, there's no “mental image” of Sonic Forces when an LLM generates a sentence about it, and there are simply no opinions in play. It just says what is statistically the most likely thing someone would say, given its training data. How is this any more intentional than that one guy saying that Sonic Forces is bad (or good) in German?
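To make the “statistically most likely next word” idea a bit more concrete, here's a toy sketch in Python. Big caveat: real LLMs don't work like this. They use huge neural networks over tokens and usually sample from probabilities instead of always picking the top word. This is just a bigram model, about the simplest possible version of the idea, and every name in it (`train_bigrams`, `most_likely_next`, `generate`), as well as the tiny corpus, is made up for illustration.

```python
from collections import Counter, defaultdict

# Toy bigram "language model": a hypothetical illustration, NOT how real LLMs work.

def train_bigrams(corpus):
    """Count how often each word is followed by each other word."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def most_likely_next(follows, word):
    """Return the word seen most often after `word`, or None if `word` never had a follower."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def generate(follows, start, max_words=6):
    """Greedily chain the most frequent next word; no meaning, no beliefs, just counts."""
    words = [start.lower()]
    for _ in range(max_words - 1):
        nxt = most_likely_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

corpus = [
    "sonic forces is a bad game",
    "sonic forces is a bad game honestly",
    "super mario odyssey is a good game",
]
follows = train_bigrams(corpus)
print(generate(follows, "sonic"))  # prints "sonic forces is a bad game"
```

Funnily enough, starting from “mario” gives “mario odyssey is a bad game honestly”, simply because “a” is followed by “bad” more often than by “good” in this tiny corpus. The program has no opinion on either game. It's just counting.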

Now, it's true that some LLMs give different responses than others and seem to hold different values. Grok, for example, is far more likely than ChatGPT to answer questions in a less politically correct way. This isn't because they hold different opinions, though. It's because we humans control what goes into the “minds” of these LLMs. We choose which information to feed them, and we can fine-tune them to favor different types of information. Either way, the LLM doesn't know what it's reacting to.

Most people probably haven't read about, or even consciously thought much about, this whole “intentionality” thing, but I'm sure it's still something we all grasp intuitively. It's just part of human nature. We instinctively understand that our words need to mean something in order for us to say that we understand them. So whenever we ask an LLM to write a story, many of us already know that the AI isn't thinking about what makes a story good or whatever. It's just predicting the next statistically most likely word. And since this process is so unlike ours, we, understandably, approach it with a lot of skepticism.

The Soul

LLMs learn things by reading about them in all sorts of sources.

We are different. We learn things by actually experiencing them with all our senses. Many of us can describe what happens when we break a glass full of water, not because we read about it somewhere, but because we were there, right when it happened! But an LLM wasn't. Sure, it can describe what happens when a glass breaks, sometimes with eerie precision, but that's because it's recombining descriptions it found elsewhere. It never lived that moment, and never will. And I think this is an important distinction.

These experiences reflect a person. They are what give a piece of art its meaning. They're why, when a human artist paints a shattered glass on the floor, there's often a story behind it. You know, maybe they recall that one time it happened and how the sharp crash broke the silence. Or that one time they got in trouble because of it.

And their portrayal of the event will depend on those experiences. Maybe the person depicted looks shocked because of the feeling of cold water spilling over their bare feet. Maybe they look worried because of the guilt of ruining a perfectly good glass that doesn't belong to them. Or maybe they look disappointed, because now they have to spend 10 minutes cleaning up all the glass shards. All of this tells us something about the artist, their past experiences, and their way of thinking. Even if we made an LLM with a very sophisticated way of understanding things, it just wouldn't be the same.

Without that lived experience, without the capacity to experience, to feel, to suffer, to wonder, an AI can only ever produce the form of art, not the substance. It can make things that look like paintings, that sound like music, that read like poems. But there is no meaning to them whatsoever. There's no soul.

Five-plus years ago or so, the word “soul” was the biggest buzzword in our friend group. Super Mario Odyssey was regarded as a game with so much soul. Sonic Forces? Not so much. The word got tossed around so much that using it became instinctive to us. We all knew what we meant, even if we couldn't explicitly define it.

And I feel like the “soul” we were talking about back then and the “soul” that usually comes up in discussions about AI are the same thing.

Super Mario Odyssey was clearly a labor of love. There were so many cute touches that only someone who actually cares about this shit could come up with. Like, remember that mission where there's a lonely guy sitting on a bench, and if you go near him and sit, he gives you a moon? It's one of the simplest missions in the game, yet it's the one that stuck with people the most. Because there's a meaning there. Even if it's not very deep, it says something about loneliness and who Mario is as a character. There's just something human to it.

Sonic Forces, meanwhile, felt like a game made more out of obligation. When people talk about it, they don't recall those kinds of meaningful moments. They remember shit like Knuckles's out-of-touch line about the horrors of war. The intent wasn't to put out a work everyone could be proud of; it was just to put out something, because the deadline was getting closer. There's no deeper meaning behind lines like that. Tails saying “Tru dat!” doesn't tell us anything about his character.

I think this is why people say “AI art isn't real art”. It's not because they're being elitist or reactionary; it's because they instinctively feel that something is missing. A gap, a hollow space where the soul would be. And in my opinion, that matters way more than technical skill or execution.

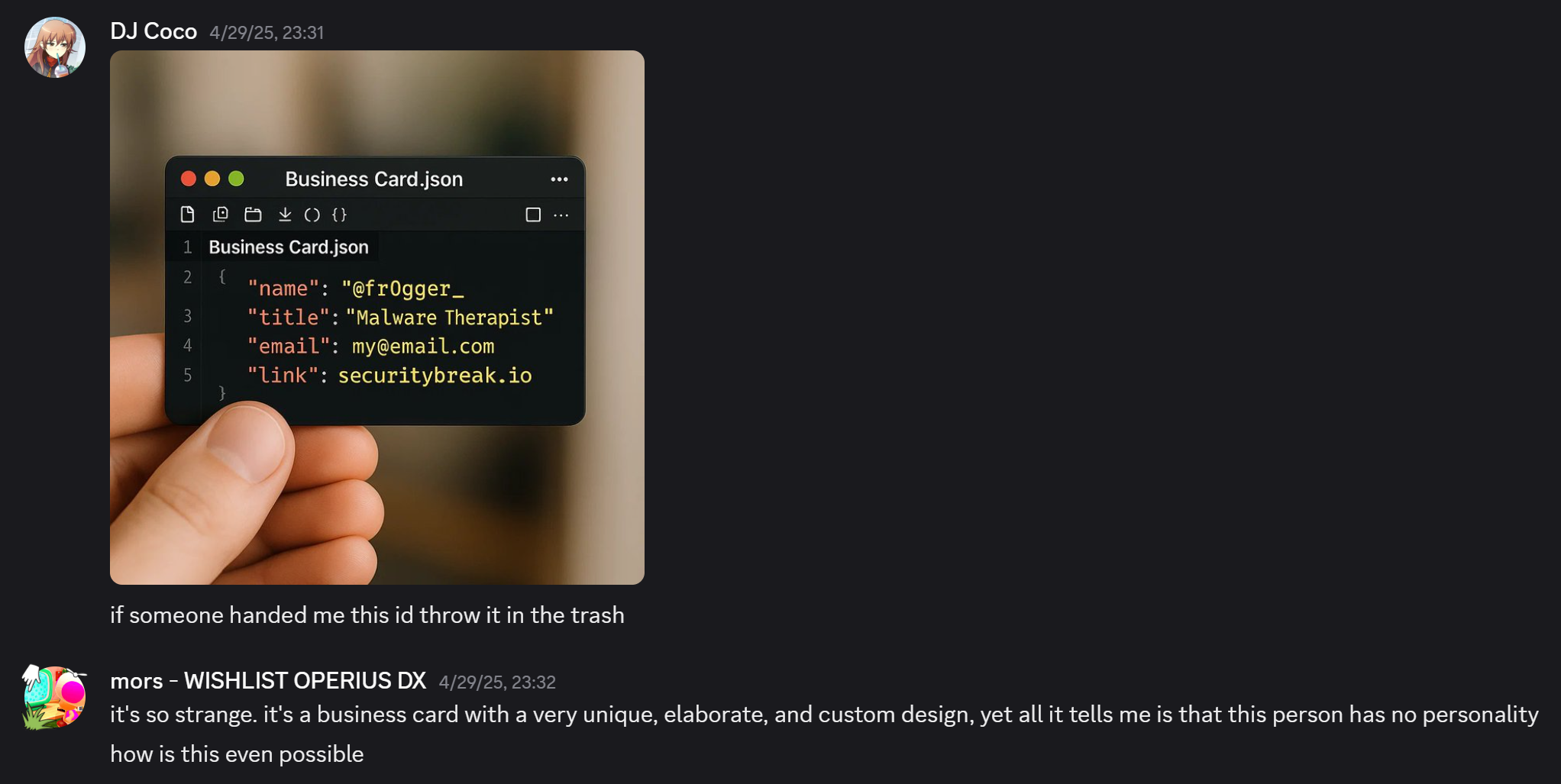

And if you want proof, here's a conversation I recently had with a friend:

Admittedly, I was a bit harsh with my response. I was already in a pissed-off mood, so when I saw this “business card”, I just gave my unfiltered opinion.

But then I looked closer.

This is not a real business card. Look at the line numbers and the inconsistent use of quotes. This image is AI-generated. I didn't notice it at first, but I still instinctively felt the lack of soul in it. I think that should say something…

But wait a sec!

Some of you might be asking now: “Mors, there can be both intentionality and soul in AI art! It's all in the prompt!”

Oh boy…

When I write code, even something as boring as a settings menu, I make thousands of tiny decisions. Some of them are conscious, like the general layout or which options I include, but most happen subconsciously. You know, stuff like the way I name things, the spacing I use between the elements, or the small animations I include to give user feedback. It's these micro-decisions that make the thing mine.

The same idea applies to any creative discipline. In drawing, for example, composition, lighting, color choices, texture, and so on are all shaped by micro-decisions. And believe it or not, they all have some intent behind them, even if they aren't all consciously made!

The issue is that human language is inherently too imprecise to capture everything, especially in visual mediums. No amount of prompt engineering can convey the countless micro-decisions involved in a work of art. So when you use AI, whether you like it or not, you offload all those micro-decisions to the machine, which picks the most statistically generic outcome.

On some level, sure, the prompt itself can count as intent. But let's say we gave the same prompt to three human artists. We'd get three pieces of work, each telling us something different about its artist: their influences, biases, priorities, fears… Now let's say we gave it to three different generative AI models instead. We'd get three results that don't really convey anything beyond the literal meaning of the prompt.

The question then becomes where to draw the line. One could argue that digital art also loses some of the intent. After all, unlike traditional media, digital art often lacks visible brush strokes, whose appearance and physical texture can tell us so much about the artist and their creative process.

...honestly? This is one part where I don't have a clear answer. I think it depends on the person. In my personal opinion, though, reducing all the intent to a single line of text is going too far. At that point, even if it can be considered art, it has so little human input in it that, to me, it has almost no value.

Speaking of the process of creation, there's another aspect of real art that, in my opinion, really matters but doesn't get brought up often.

The Journey

Making art as a human isn't just about the finished product or the intent, but also about the act of creating itself. Like, creativity is just inherently fun! It's fun to work on something and watch it gradually come to life. It's fun to learn stuff while you're working on a piece, and it's fun to gain experience and slowly watch your work get better. By using AI, you're robbing yourself of all that.

And for what? A brief moment of entertainment from seeing what an algorithm did with your prompt? I mean, there's nothing wrong with enjoying that, just like there's nothing wrong with enjoying scrolling through TikTok. But what do you actually gain from it? Does it make you grow in any way? If someone asked you what the creative process felt like, what would you even say?

It's all about, you know, not the destination, but the journey (if we really want to be cliché with our wording).

The Grand Point

As all this generative AI stuff becomes more and more integrated into our daily lives, I think it becomes increasingly important to stop and reflect on what we risk losing when we delegate creative tasks to it. I won't claim that generative AI can never be useful. In fact, while writing this very article, I asked it multiple times when I wasn't sure about my grammar. Annoyingly, it kept trying to reword all my sentences instead of strictly fixing those issues, but my point is that you can, in theory, use generative AI in ways that don't reduce the artistic value of your work.

But when it comes to crafting your own art, your own vision, your own expression, your own philosophy, your own worldview... you just can't do that through AI. At that point, it's not your expression anymore; it belongs to the algorithm. And the algorithm's vision not only has no intentionality, no meaning, and no soul, but it also robs you of the journey. And that matters.

2026 EDIT: I finally got a blog set up on my personal website, so I'm moving a lot of my more personal posts there. Go take a look!
